From syslog-ng Premium Edition (syslog-ng PE) version 7.0.21, you can use the google_pubsub() destination to build your own Google Pub/Sub messaging infrastructure with syslog-ng PE as a "Publisher" entity, utilizing the HTTP REST interface of the service.

Similarly to syslog-ng PE's stackdriver() destination, the google_pubsub() destination connects to a service on Google's infrastructure, in this case the asynchronous Google Pub/Sub messaging service.

For more information about Google Pub/Sub's messaging service, see "What Is Pub/Sub?" in the Google Pub/Sub documentation. The rest of this section and its subsections assume that you are familiar with the Google Pub/Sub messaging service and its concepts and terminology.

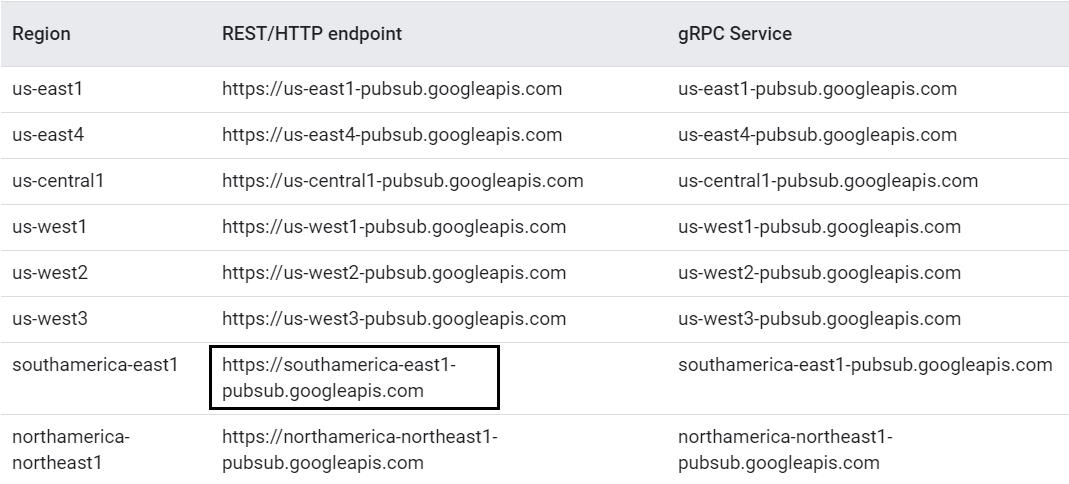

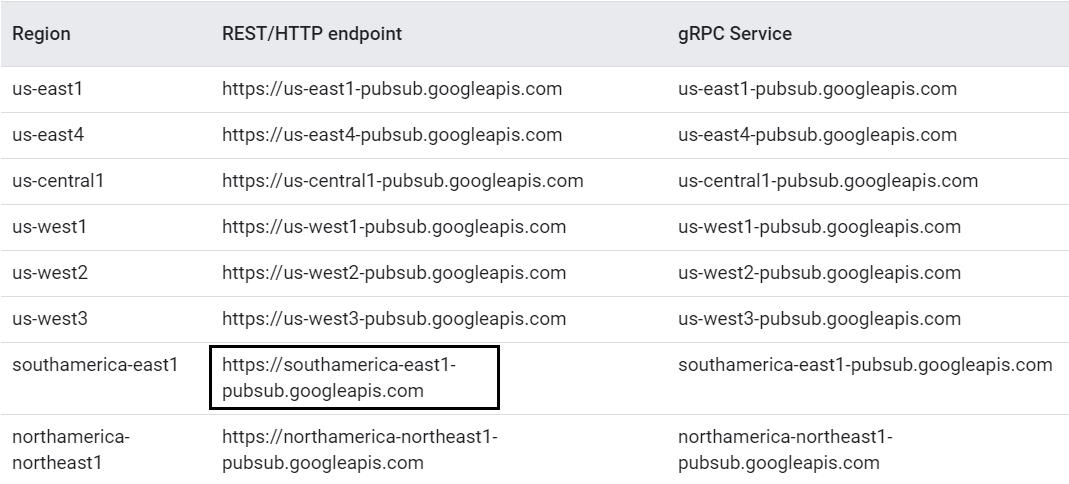

From syslog-ng PE version 7.0.24, the following endpoints are available for the google_pubsub() destination:

- The global REST/HTTP endpoint (default)

  By default, the google_pubsub() destination uses the global REST/HTTP endpoint, which automatically redirects requests to a nearby region.

  The default global REST/HTTP endpoint for Google Pub/Sub services, which is also the default setting of the google_pubsub() destination, is "https://pubsub.googleapis.com".

- Your custom service endpoint (optional)

  From version 7.0.24, syslog-ng PE supports the service_endpoint() option, which configures a service endpoint in addition to the default global REST/HTTP endpoint.

  For more information, see the description and list of available Google Pub/Sub service endpoints.

  NOTE: Configuring the service_endpoint() option for the google_pubsub() destination is optional, and it does not affect the default global REST/HTTP endpoint in any way.

Configuring the service_endpoint() option for the google_pubsub() destination

You can configure your google_pubsub() destination to route messages to regional service endpoints in addition to the default global REST/HTTP endpoint by including the service_endpoint() option in your configuration.

Example: configuring the service_endpoint() option for the google_pubsub() destination

You can include the service_endpoint() option for the google_pubsub() destination as follows:

    destination {
        google_pubsub(
            ...
            service_endpoint("https://southamerica-east1-pubsub.googleapis.com")
        );
    };

CAUTION: When configuring the service_endpoint() option, make sure you include the https:// part of the endpoint URL. To avoid mistakes, One Identity recommends that you copy the value from the REST/HTTP endpoint column for the region of your choice as-is, directly from the table of available regional endpoints.

For example:

Figure 27: Available regional endpoint URLs for Google Pub/Sub

The example above illustrates the REST/HTTP endpoint URL of the southamerica-east1 region, which is the same region that is included in the configuration example.
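The check described in the caution can be sketched in a few lines of Python, for example as a pre-flight step before a value is pasted into the configuration. This is only an illustration: the function name check_service_endpoint is hypothetical and is not part of syslog-ng PE, and the sketch verifies only the https:// scheme and the presence of a host name, not whether the region actually exists.

```python
from urllib.parse import urlparse


def check_service_endpoint(url: str) -> str:
    """Return the trimmed URL if it has an https:// scheme and a host name.

    Hypothetical helper for illustration; syslog-ng PE does not ship it.
    """
    candidate = url.strip()
    parsed = urlparse(candidate)
    if parsed.scheme != "https":
        raise ValueError(f"endpoint must start with https://: {candidate!r}")
    if not parsed.netloc:
        raise ValueError(f"endpoint has no host name: {candidate!r}")
    return candidate


if __name__ == "__main__":
    # The regional endpoint used in the configuration example above.
    print(check_service_endpoint("https://southamerica-east1-pubsub.googleapis.com"))
```

A value copied without the scheme, such as southamerica-east1-pubsub.googleapis.com, raises ValueError in this sketch.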

The following table describes the possible error messages that you may encounter while using the google_pubsub() destination.

| Error code | Response | Possible reason | Possible solution |
|---|---|---|---|
| 400 | `"error": { "code": 400, "message": "The value for message_count is too large. You passed 1001 in the request, but the maximum value is 1000.", "status": "INVALID_ARGUMENT" }` | There are too many messages in one batch. Google Pub/Sub allows a maximum of 1000 messages per batch. | Decrease the value of the batch-lines() option if you modified it previously. |
| 400 | `"error": { "code": 400, "message": "Request payload size exceeds the limit: 10485760 bytes.", "status": "INVALID_ARGUMENT" }` | The batch size is too large. Google Pub/Sub allows a maximum of 10 MB per batch. | To overcome the issue, try one of the following methods: |
| 403 | `"error": { "code": 403, "message": "User not authorized to perform this action.", "status": "PERMISSION_DENIED" }` | One of the following: wrong credentials, or insufficient permissions. | To overcome the issue, try one of the following methods: check the credentials .JSON file that you downloaded from the UI of Google Pub/Sub, or check the associated "roles" of your service account. The google_pubsub() destination requires the "Pub/Sub Publisher" role to operate. |
| 404 | `"error": { "code": 404, "message": "Requested project not found or user does not have access to it (project=YOUR_PROJECT). Make sure to specify the unique project identifier and not the Google Cloud Console display name.", "status": "NOT_FOUND" }` | You have specified an incorrect project ID. The string YOUR_PROJECT in the error message is the project ID that you provided in the configuration. | The project name that you can find on the Pub/Sub UI is not necessarily the same as the project ID. Make sure that your configuration specifies the unique project ID and not the display name. |
| 404 | `"error": { "code": 404, "message": "Resource not found (resource=YOUR_TOPIC).", "status": "NOT_FOUND" }` | You have specified an incorrect topic ID. The string YOUR_TOPIC in the error message is the topic ID that you provided in the configuration. | Make sure that the topic ID in your configuration is the ID of an existing topic, and that you have sufficient permissions to access it. |
| 429 | `"error": { "code": 429, "message": "Quota exceeded for quota metric 'Regional publisher throughput, kB' and limit 'Regional publisher throughput, kB per minute per region' of service 'pubsub.googleapis.com' for consumer 'project_number:127287437417'", "status": "RESOURCE_EXHAUSTED" }` | This error indicates that you have exceeded the quota for the given Google Cloud project. | Review your Google Cloud project's quota and adjust it according to Google's documentation if necessary. |
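The two 400 errors in the table come from two independent limits, 1000 messages and 10485760 bytes per batch, and a batch has to satisfy both. The following Python sketch illustrates how the two limits interact. It is not how syslog-ng PE batches messages internally; the function name split_batches is hypothetical, and counting raw UTF-8 bytes is a simplifying assumption, because the real request payload also includes encoding overhead.

```python
from typing import Iterable, Iterator, List

MAX_MESSAGES = 1000      # message count limit quoted in the first 400 error
MAX_BYTES = 10_485_760   # payload limit quoted in the second 400 error


def split_batches(
    messages: Iterable[str],
    max_messages: int = MAX_MESSAGES,
    max_bytes: int = MAX_BYTES,
) -> Iterator[List[str]]:
    """Yield batches that stay within both the count and the byte limit.

    Assumption: the size of a message is its raw UTF-8 length; the
    overhead of the actual request encoding is ignored.
    """
    batch: List[str] = []
    size = 0
    for msg in messages:
        msg_size = len(msg.encode("utf-8"))
        if msg_size > max_bytes:
            raise ValueError("a single message exceeds the byte limit")
        # Close the current batch if adding this message would break a limit.
        if batch and (len(batch) >= max_messages or size + msg_size > max_bytes):
            yield batch
            batch, size = [], 0
        batch.append(msg)
        size += msg_size
    if batch:
        yield batch


if __name__ == "__main__":
    # 1001 short messages no longer fit into a single batch.
    print([len(b) for b in split_batches(["x"] * 1001)])
```

In this sketch, whichever limit is reached first closes the batch, which is why lowering only the line count does not help when individual messages are large.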

Starting with version 5.3, syslog-ng PE can send plain-text log files to the Hadoop Distributed File System (HDFS), allowing you to store your log data on a distributed, scalable file system. This is especially useful if you have a huge amount of log messages that would be difficult to store otherwise, or if you want to process your messages using Hadoop tools (for example, Apache Pig).

NOTE: To use this destination, syslog-ng Premium Edition (syslog-ng PE) must run in server mode. Typically, only the central syslog-ng PE server uses this destination. For more information on the server mode, see Server mode.

Note the following limitations when using the syslog-ng PE hdfs destination:

- This destination is only supported on the Linux platforms that use the linux glibc2.11 installer, including: Red Hat ES 7, Ubuntu 14.04 (Trusty Tahr).

- Since syslog-ng PE uses the official Java HDFS client, the hdfs destination has significant memory usage (about 400 MB).

- NOTE: You cannot set when log messages are flushed. Hadoop performs this action automatically, depending on its configured block size and the amount of data received. There is no way for the syslog-ng PE application to influence when the messages are actually written to disk. This means that syslog-ng PE cannot guarantee that a message sent to HDFS is actually written to disk. When using flow-control, syslog-ng PE acknowledges a message as written to disk when it passes the message to the HDFS client. This method is as reliable as your HDFS environment.

- The log messages of the underlying client libraries are available in the internal() source of syslog-ng PE.

NOTE: The hdfs destination has been tested with Hortonworks Data Platform.

Declaration

    @module mod-java
    @include "scl.conf"

    hdfs(
        client-lib-dir("/opt/syslog-ng/lib/syslog-ng/java-modules/:<path-to-preinstalled-hadoop-libraries>")
        hdfs-uri("hdfs://NameNode:8020")
        hdfs-file("<path-to-logfile>")
    );

Example: Storing logfiles on HDFS

The following example defines an hdfs destination using only the required parameters.

    @module mod-java
    @include "scl.conf"

    destination d_hdfs {
        hdfs(
            client-lib-dir("/opt/syslog-ng/lib/syslog-ng/java-modules/:/opt/hadoop/libs")
            hdfs-uri("hdfs://10.140.32.80:8020")
            hdfs-file("/user/log/logfile.txt")
        );
    };

NOTE: If you delete all Java destinations from your configuration and reload syslog-ng, the JVM is no longer used, but it keeps running. If you want to stop the JVM, stop syslog-ng and then start it again.

The following describes how to send messages from syslog-ng PE to HDFS.

To send messages from syslog-ng PE to HDFS

- If you want to use the Java-based modules of syslog-ng PE (for example, the Elasticsearch, HDFS, or Kafka destinations), download and install the Java Runtime Environment (JRE) 1.8.

  The Java-based modules of syslog-ng PE are tested and supported when using the Oracle implementation of Java. Other implementations are untested and unsupported; they may or may not work as expected.

- Download the Hadoop Distributed File System (HDFS) libraries (version 2.x) from http://hadoop.apache.org/releases.html.

- Extract the HDFS libraries into a target directory (for example, /opt/hadoop/lib/), then execute the classpath command of the hadoop script: bin/hdfs classpath

  Use the classpath that this command returns in the syslog-ng PE configuration file, in the client-lib-dir() option of the HDFS destination.

- Remove all log4j and slf4j-log4j12 files from the Hadoop library, and download log4j-slf4j-impl (https://mvnrepository.com/artifact/org.apache.logging.log4j/log4j-slf4j-impl/2.17.1) in the version that matches the log4j2 version found in syslog-ng Premium Edition. The log4j-slf4j-impl file should be in one of the directories that the bin/hdfs classpath command returned.
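The client-lib-dir() value in the examples of this section is a colon-separated list that starts with the syslog-ng PE java-modules directory and continues with the Hadoop library paths. A minimal Python sketch of assembling that value from the output of bin/hdfs classpath might look like the following. The function name client_lib_dir is hypothetical, and the sketch assumes that the command prints a single colon-separated classpath string.

```python
SYSLOG_NG_JAVA_MODULES = "/opt/syslog-ng/lib/syslog-ng/java-modules/"


def client_lib_dir(hdfs_classpath: str,
                   java_modules: str = SYSLOG_NG_JAVA_MODULES) -> str:
    """Join the java-modules directory with the Hadoop classpath entries.

    Assumption: hdfs_classpath is the colon-separated text printed by
    `bin/hdfs classpath`. Empty and duplicate entries are dropped.
    """
    entries = [java_modules]
    for entry in hdfs_classpath.strip().split(":"):
        if entry and entry not in entries:
            entries.append(entry)
    return ":".join(entries)


if __name__ == "__main__":
    # Reproduces the value used in the d_hdfs example of this section.
    print(client_lib_dir("/opt/hadoop/libs\n"))
```

The resulting string can be pasted between the quotes of the client-lib-dir() option. In practice the input would be the captured output of the classpath command rather than a literal string.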