Log paths

Log paths determine what happens with the incoming log messages. Messages coming from the sources listed in the log statement and matching all the filters are sent to the listed destinations.

To define a log path, add a log statement to the syslog-ng configuration file using the following syntax:

Declaration

    log {
        source(s1); source(s2); ...
        optional_element(filter1|parser1|rewrite1);
        optional_element(filter2|parser2|rewrite2);
        ...
        destination(d1); destination(d2); ...
        flags(flag1[, flag2...]);
    };

Caution:

Log statements are processed in the order they appear in the configuration file, so the order of log paths can affect what happens to a message, especially when filters and log flags are used.

NOTE: The order of filters, rewriting rules, and parsers in the log statement is important, as they are processed sequentially.
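To illustrate why the order of elements matters, the following sketch places a rewrite rule before a filter. It assumes that the s_localhost source and a d_file1 destination are defined elsewhere; the r_mask and f_secret names are hypothetical and exist only for this illustration.

```conf
# Hypothetical rewrite rule: masks every occurrence of "secret" in the message body.
rewrite r_mask {
    subst("secret", "*****", value("MESSAGE"), flags("global"));
};

# Hypothetical filter: matches messages whose body contains "secret".
filter f_secret {
    message("secret");
};

log {
    source(s_localhost);
    rewrite(r_mask);      # runs first, so "secret" is already masked...
    filter(f_secret);     # ...and this filter no longer finds it
    destination(d_file1);
};
```

With this ordering, nothing should reach d_file1. Swapping the rewrite(r_mask) and filter(f_secret) lines lets the filter see the original text, so messages containing "secret" pass the filter and are then masked before they are stored.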

Example: A simple log statement

The following log statement sends all messages arriving at localhost (127.0.0.1, port 1999) to a remote server.

    source s_localhost {
        network(
            ip(127.0.0.1)
            port(1999)
        );
    };

    destination d_tcp {
        network("10.1.2.3"
            port(1999)
            localport(999)
        );
    };

    log {
        source(s_localhost);
        destination(d_tcp);
    };

By default, all matching log statements are processed, and each message is sent to every matching destination. A single log message can therefore be sent to the same destination several times if that destination is listed in several log statements, and it can also be sent to several different destinations.

This default behavior can be changed using the flags() parameter. Flags apply to individual log paths; they are not global options. For details and examples on the available flags, see Log path flags. The effect and use of the flow-control flag is detailed in Managing incoming and outgoing messages with flow-control.
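As a rough sketch of how a flag changes the default behavior, the following configuration uses the final flag so that authentication messages stop at the first log path. The f_auth filter, the d_auth and d_all destinations, and the file paths are hypothetical; only s_localhost comes from the example above.

```conf
# Hypothetical filter and destinations, for illustration only.
filter f_auth { facility(auth, authpriv); };
destination d_auth { file("/var/log/auth.log"); };
destination d_all  { file("/var/log/all.log"); };

# Messages that match f_auth are not processed by later log paths,
# because this log path carries the final flag.
log {
    source(s_localhost);
    filter(f_auth);
    destination(d_auth);
    flags(final);
};

# Without flags(final) above, authentication messages would also
# reach d_all through this second log path.
log {
    source(s_localhost);
    destination(d_all);
};
```

Because log statements are processed in order, moving the second log path above the first would send authentication messages to both destinations again.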

Embedded log statements

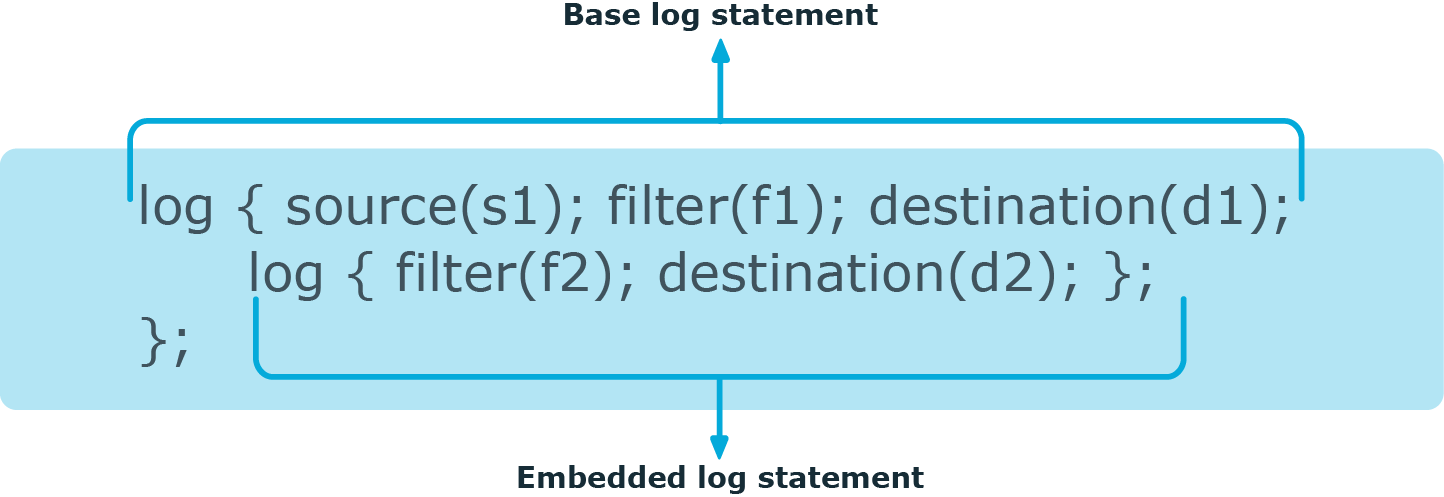

Starting from version 3.0, syslog-ng can handle embedded log statements (also called log pipes). Embedded log statements are useful for creating complex, multi-level log paths that have several destinations and use filters, parsers, and rewrite rules.

For example, if you want to filter your incoming messages based on the facility parameter, and then use further filters to send messages arriving from different hosts to different destinations, you would use embedded log statements.

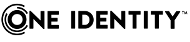

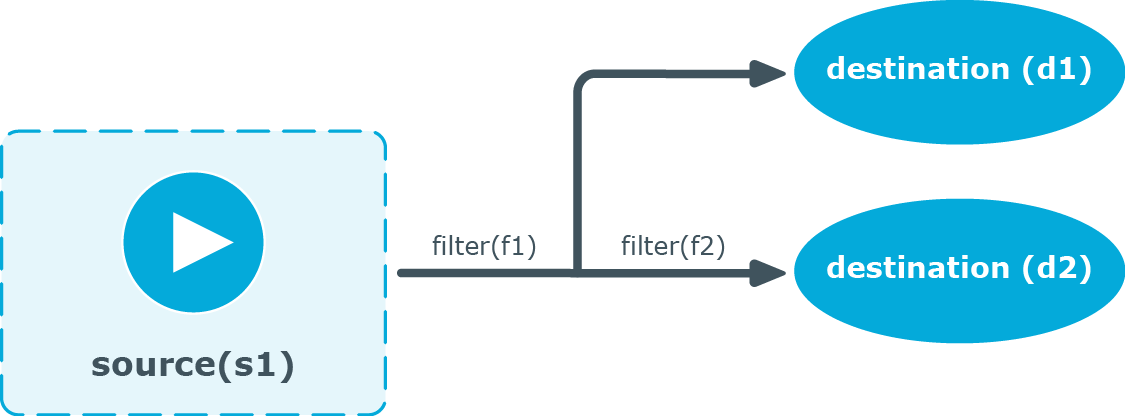

Figure 12: Embedded log statement
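The facility-then-host scenario described above could look roughly like the following sketch. The s_network source and the d_mailhost1 and d_mailhost2 destinations are assumed to be defined elsewhere, and the host names are hypothetical.

```conf
log {
    source(s_network);

    # First level: keep only mail-facility messages (evaluated once).
    filter { facility(mail); };

    # Second level: each embedded statement sees only the mail messages.
    log {
        filter { host("mailhost1"); };
        destination(d_mailhost1);
    };
    log {
        filter { host("mailhost2"); };
        destination(d_mailhost2);
    };
};
```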

Embedded log statements include sources (and usually filters, parsers, rewrite rules, or destinations) and other log statements that can include filters, parsers, rewrite rules, and destinations. The following rules apply to embedded log statements:

- Only the beginning (also called top-level) log statement can include sources.

- Embedded log statements can include multiple log statements on the same level (that is, a top-level log statement can include two or more log statements).

- Embedded log statements can include several levels of log statements (that is, a top-level log statement can include a log statement that includes another log statement, and so on).

- After an embedded log statement, you can write either another log statement or the flags() option of the original log statement. You cannot use filters or other configuration objects. This also means that flags (except for the flow-control flag) apply to the entire log statement; you cannot apply them only to the embedded log statement.

- Embedded log statements that are on the same level receive the same messages from the higher-level log statement. For example, if the top-level log statement includes a filter, the lower-level log statements receive only the messages that pass the filter.

Figure 13: Embedded log statements

Embedded log statements can be used to optimize the processing of log messages, for example, to re-use the results of filtering and rewriting operations.
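A minimal sketch of this kind of re-use follows: the filter and the rewrite rule run once in the top-level statement, and both embedded statements work on the result. It assumes that s_localhost, d_file1, and d_file2 are defined elsewhere; the custom.site name-value pair and its value are hypothetical.

```conf
log {
    source(s_localhost);

    # Shared work: done once, before the embedded statements.
    filter { facility(daemon); };
    rewrite { set("datacenter-1", value("custom.site")); };

    # Both embedded statements receive the filtered, rewritten messages.
    log {
        destination(d_file1);
    };
    log {
        filter { level(err..emerg); };
        destination(d_file2);
    };

    # Flags come after the embedded statements and apply to the
    # whole log statement.
    flags(final);
};
```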

Using embedded log statements

Embedded log statements (for details, see Embedded log statements) re-use the results of processing messages (for example, the results of filtering or rewriting) to create complex log paths. Embedded log statements use the same syntax as regular log statements, but they cannot contain additional sources. To define embedded log statements, use the following syntax:

    log {
        source(s1); source(s2); ...
        optional_element(filter1|parser1|rewrite1);
        optional_element(filter2|parser2|rewrite2);
        ...
        destination(d1); destination(d2); ...

        #embedded log statement
        log {
            optional_element(filter1|parser1|rewrite1);
            optional_element(filter2|parser2|rewrite2);
            ...
            destination(d1); destination(d2); ...

            #another embedded log statement
            log {
                optional_element(filter1|parser1|rewrite1);
                optional_element(filter2|parser2|rewrite2);
                ...
                destination(d1); destination(d2); ...
            };
        };

        #set flags after the embedded log statements
        flags(flag1[, flag2...]);
    };

Example: Using embedded log paths

The following log path sends every message to the configured destinations: both the d_file1 and the d_file2 destinations receive every message of the source.

    log {
        source(s_localhost);
        destination(d_file1);
        destination(d_file2);
    };

The next example is equivalent to the one above, but uses an embedded log statement.

    log {
        source(s_localhost);
        destination(d_file1);
        log {
            destination(d_file2);
        };
    };

The following example uses two filters:

- messages coming from the host 192.168.1.1 are sent to the d_file1 destination, and

- messages coming from the host 192.168.1.1 and containing the string example are sent to the d_file2 destination.

    log {
        source(s_localhost);
        filter {
            host(192.168.1.1);
        };
        destination(d_file1);
        log {
            filter {
                message("example");
            };
            destination(d_file2);
        };
    };

The following example collects logs from multiple source groups and uses the source() filter in the embedded log statement to select messages of the s_network source group.

    log {
        source(s_localhost);
        source(s_network);
        destination(d_file1);
        log {
            filter {
                source(s_network);
            };
            destination(d_file2);
        };
    };

if-else-elif: Conditional expressions

You can use if {}, elif {}, and else {} blocks to configure conditional expressions.
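As an illustration of chaining all three blocks, the following sketch routes messages three ways. It assumes that s_localhost is defined elsewhere; the d_errors, d_warnings, and d_other destinations and the matched strings are hypothetical.

```conf
log {
    source(s_localhost);

    if (message("error")) {
        # Taken when the message body contains "error".
        destination(d_errors);
    } elif (message("warning")) {
        # Checked only if the first condition did not match.
        destination(d_warnings);
    } else {
        # Everything else.
        destination(d_other);
    };
};
```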

Conditional expressions' format

Conditional expressions have two formats:

- Explicit filter expression:

    if (message('foo')) {
        parser { date-parser(); };
    } else {
        ...
    };

This format only uses the filter expression in if(). If the message does not contain 'foo', the else branch is taken.

The else {} branch can be empty; you can use it to send the message to the default branch.

- Condition embedded in the log path:

    if {
        filter { message('foo'); };
        parser { date-parser(); };
    } else {
        ...
    };

This format considers all filters and all parsers as the condition, combined. If the message contains 'foo' and the date-parser() fails, the else branch is taken. Similarly, if the message does not contain 'foo', the else branch is taken.
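The embedded-condition format is handy when the condition is whether a parser succeeds. The following sketch, which assumes that s_localhost is defined elsewhere and uses the hypothetical destinations d_json and d_plain, separates messages that parse as JSON from those that do not.

```conf
log {
    source(s_localhost);

    if {
        # The branch succeeds only if the message parses as JSON.
        parser { json-parser(prefix(".json.")); };
        destination(d_json);
    } else {
        # Messages that failed JSON parsing end up here.
        destination(d_plain);
    };
};
```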

Using the if {} and else {} blocks in your configuration

You can copy the following example and use it as a template for using the if {} and else {} blocks in your configuration.

Example for using the if {} and else {} blocks in your configuration

The following configuration generates two test messages with example-msg-generator(), one containing STRING-TO-MATCH and one containing NO-MATCH, and writes each to standard output with a prefix that shows which branch it took:

    log {
        source { example-msg-generator(num(1) template("...,STRING-TO-MATCH,...")); };
        source { example-msg-generator(num(1) template("...,NO-MATCH,...")); };

        if (message("STRING-TO-MATCH"))
        {
            destination { file(/dev/stdout template("matched: $MSG\n") persist-name("1")); };
        }
        else
        {
            destination { file(/dev/stdout template("unmatched: $MSG\n") persist-name("2")); };
        };
    };

The configuration results in the following console printout:

    matched: ...,STRING-TO-MATCH,...
    unmatched: ...,NO-MATCH,...

An alternative, less straightforward way to implement conditional evaluation is to use junctions. For details on junctions and channels, see Junctions and channels.
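For comparison, a junction-based version of the template above might look like the following sketch. It assumes that flags(final) on the first channel keeps matched messages out of the second channel; the d_matched and d_unmatched destinations are hypothetical, and s_localhost is assumed to be defined elsewhere.

```conf
log {
    source(s_localhost);

    junction {
        # Plays the role of the if branch.
        channel {
            filter { message("STRING-TO-MATCH"); };
            destination(d_matched);
            flags(final);
        };
        # Plays the role of the else branch: intended to be reached
        # only by messages that the first channel did not accept.
        channel {
            destination(d_unmatched);
            flags(final);
        };
    };
};
```

The if {} and else {} form expresses the same intent with less nesting, which is why it is usually the easier one to read and maintain.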