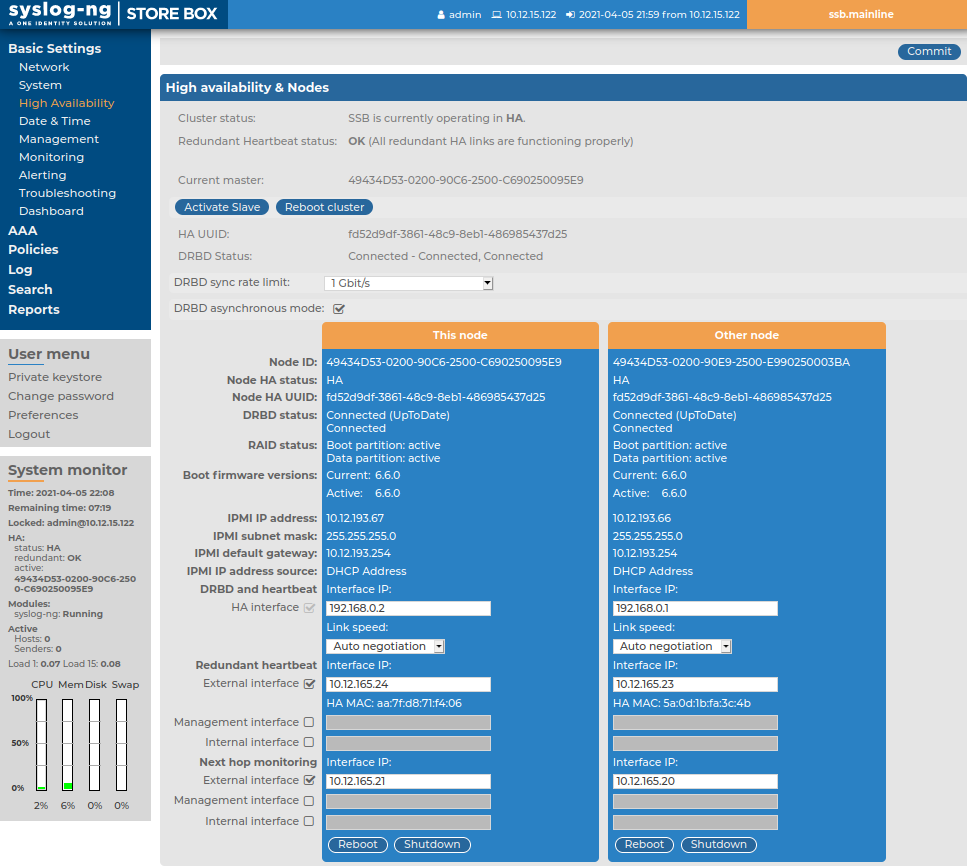

Controlling SSB: restart, shutdown

To restart or shut down syslog-ng Store Box (SSB), navigate to Basic Settings > System > System control and click the respective action button. The Other node refers to the slave node of a high availability SSB cluster. For details on high availability clusters, see Managing a high availability SSB cluster.

|

|

Caution:

-

When rebooting the nodes of a cluster, reboot the other (slave) node first to avoid unnecessary takeovers.

-

When shutting down the nodes of a cluster, shut down the other (slave) node first. When powering on the nodes, start the master node first to avoid unnecessary takeovers.

-

When both nodes are running, avoid interrupting the connection between the nodes: do not unplug the Ethernet cables, reboot the switch or router between the nodes (if any), or disable the HA interface of SSB. |

Figure 84: Basic Settings > System > System control — Performing basic management

NOTE: Web sessions to the SSB interface are persistent and remain open after rebooting SSB, so you do not have to relogin after a reboot.

Managing a high availability SSB cluster

High availability (HA) clusters can stretch across long distances, such as nodes across buildings, cities or even continents. The goal of HA clusters is to support enterprise business continuity by providing location-independent failover and recovery.

To set up a high availability cluster, connect two syslog-ng Store Box (SSB) units with identical configurations in high availability mode. This creates a primary-secondary node pair.

NOTE: Primary and secondary nodes have the following functions, and are sometimes referred to, and displayed, as follows:

-

Primary node: Functions as the active node in the HA cluster. Sometimes the primary node is also referred to as the master node. On the Basic Settings > High Availability > High availability & Nodes page, the primary node is displayed as This node.

-

Secondary node: Functions as the backup node in the HA cluster. Sometimes the secondary node is also referred to as the slave node. On the Basic Settings > High Availability > High availability & Nodes page, the primary node is displayed as Other node

Should the primary node stop functioning, the secondary node takes over the functionality of the primary node. This way, the SSB servers are continuously accessible.

NOTE: To use the management interface and high availability mode together, connect the management interface of both SSB nodes to the network, otherwise you will not be able to access SSB remotely when a takeover occurs.

The primary node shares all data with the secondary node using the HA network interface (labeled as 4 or HA on the SSB appliance). The disks of the primary and the secondary node must be synchronized for the HA support to operate correctly. Interrupting the connection between running nodes (unplugging the Ethernet cables, rebooting a switch or a router between the nodes, or disabling the HA interface) disables data synchronization and forces the secondary node to become active. This might result in data loss. You can find instructions to resolve such problems and recover an SSB cluster in Troubleshooting an SSB cluster.

NOTE: HA functionality was designed for physical SSB units. If SSB is used in a virtual environment, use the fallback functionalities provided by the virtualization service instead.

On virtual SSB appliances, or if you have bought a physical SSB appliance without the high availability license option, the Basic Settings > High Availability menu item is not displayed anymore.

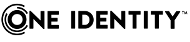

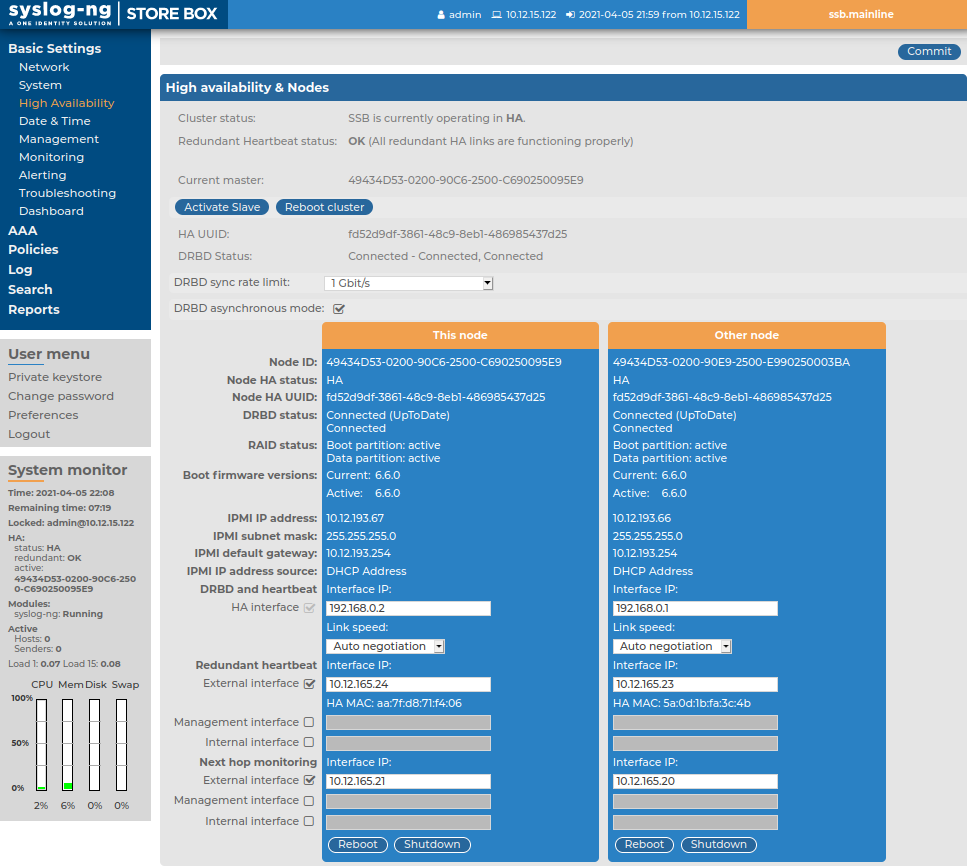

The Basic Settings > High Availability page provides information about the status of the HA cluster and its nodes.

Figure 85: Basic Settings > High Availability — Managing a high availability cluster

Information about the HA cluster

The following information is available about the cluster:

-

Cluster status: Indicates whether the SSB nodes recognize each other properly and whether those are configured to operate in high availability mode.

You can find the description of each HA status in Understanding SSB cluster statuses.

-

Redundant Heartbeat status: Indicates whether the redundant HA links in your HA cluster are functioning properly.

-

Current master: The MAC address of the high availability interface (4 or HA) of the node.

-

HA UUID: A unique identifier of the HA cluster. Only available in High Availability mode.

-

DRBD status: Indicates whether the SSB nodes recognize each other properly and whether those are configured to operate in high availability mode.

You can find the description of each DRBD status in Understanding SSB cluster statuses.

-

DRBD sync rate limit: The maximum allowed synchronization speed between the master and the slave node.

You can find more information about configuring the DRBD sync rate limit in Adjusting the synchronization speed.

-

DRBD asynchronous mode: Enable to compensate for high network latency and bursts of high activity by enabling asynchronous data replication between the primary and the secondary node.

You can find more information about configuring asynchronous data replication in Asynchronous data replication.

Information about the HA nodes

The active (primary) SSB node is labeled as This node. This unit receives the incoming log messages and provides the web interface. The SSB unit labeled as Other node is the secondary node that is activated if the primary node becomes unavailable.

The following information is available about each node:

-

Node ID: The universally unique identifier (UUID) of the physical or virtual machine.

NOTE: Due to backward compatibility, in the case of upgrades, the Node ID is the MAC address of the node's HA interface.

For SSB clusters, the IDs of both nodes are included in the internal log messages of SSB.

-

Node HA status: Indicates whether the SSB nodes recognize each other properly and whether those are configured to operate in high availability mode.

You can find the description of each HA status in Understanding SSB cluster statuses.

-

Node HA UUID: A unique identifier of the cluster. It is a software-generated identifier. Only available in High Availability mode.

-

DRBD status: The status of data synchronization between the nodes.

You can find the description of each DRBD status in Understanding SSB cluster statuses.

-

RAID status: The status of the RAID device of the node.

-

Boot firmware versions: The version numbers of the boot firmware.

You can find more information about the boot firmware in Firmware in SSB.

-

IPMI IP address: The node's IPMI IP address.

-

IPMI subnet mask:The node's IPMI subnet mask.

-

IPMI default gateway: The node's IPMI default gateway.

-

IPMI IP address source: The node's IPMI IP address source.

-

DRBD and heartbeat: You can enable and configure the following options for DRBD and heartbeat monitoring:

-

HA Interface: Enable to customize the IP address of the high availability (HA) interface.

NOTE: You can only modify the IP address in the Interface IP field when your HA cluster is in STANDALONE or in HA state.

-

Link speed: The maximum allowed speed between the primary and the secondary node. The HA link's speed must exceed the DRBD sync rate limit, else the web UI might become unresponsive and data loss can occur.

Leave this field on Auto negotiation unless specifically requested by the support team.

-

Redundant heartbeat:

You can enable and configure the following:

-

External interface: Enable to set the Interface IP address that you want to use for redundant heartbeat monitoring on the node's external interface.

-

Management interface: Enable to set the Interface IP address that you want to use for redundant heartbeat monitoring on the node's management interface.

-

Internal interface: Enable to set the Interface IP address that you want to use for redundant heartbeat monitoring on the node's internal interface..

You can find more information about configuring redundant heartbeat interfaces in Redundant heartbeat interfaces.

-

Next hop monitoring: The interfaces you enable and configure here are virtual interfaces, used only to detect that the other node is still available. The interfaces you enable here are not used to synchronize data between the nodes, and only heartbeat messages are transferred.

You can enable and configure the following:

-

External interface: Enable to set the Interface IP address that you want to use for next hop monitoring on the node's external interface.

-

Management interface: Enable to set the Interface IP address that you want to use for next hop monitoring on the node's management interface.

-

Internal interface: Enable to set the Interface IP address that you want to use for next hop monitoring on the node's internal interface.

You can find more information about configuring next hop monitoring inNext-hop router monitoring.

Configuration and management options for HA clusters

The following configuration and management options are available for HA clusters:

-

Set up a high availability cluster: You can find detailed instructions for setting up a HA cluster in Installing two SSB units in HA mode in the Installation Guide.

-

Adjust the DRBD (primary-secondary) synchronization speed: You can change the limit of the DRBD synchronization rate.

You can find more information about configuring the DRBD synchronization speed in Adjusting the synchronization speed.

-

Enable asynchronous data replication: You can compensate for high network latency and bursts of high activity by enabling asynchronous data replication between the primary and the secondary node with the DRBD asynchronous mode option.

You can find more information about configuring asynchronous data replication in Asynchronous data replication.

-

Configure redundant heartbeat interfaces: You can configure virtual interfaces for each HA node to monitor the availability of the other node.

You can find more information about configuring redundant heartbeat interfaces in Redundant heartbeat interfaces.

-

Configure next-hop monitoring: You can provide IP addresses (usually next hop routers) to continuously monitor from both the primary and the secondary nodes using ICMP echo (ping) messages. If any of the monitored addresses becomes unreachable from the primary node while being reachable from the secondary node (in other words, more monitored addresses are accessible from the secondary node) then it is assumed that the primary node is unreachable and a forced takeover occurs – even if the primary node is otherwise functional.

You can find more information about configuring next-hop monitoring in Next-hop router monitoring.

-

Reboot the HA cluster: To reboot both nodes, click Reboot Cluster. To prevent takeover, a token is placed on the secondary node. While this token persists, the secondary node halts its boot process to make sure that the primary node boots first. Following reboot, the primary node removes this token from the secondary node, allowing it to continue with the boot process.

If the token still persists on the secondary node following reboot, the Unblock Slave Node button is displayed. Clicking the button removes the token, and reboots the secondary node.

-

Reboot a node: Reboots the selected node.

When rebooting the nodes of a cluster, reboot the other (secondary) node first to avoid unnecessary takeovers.

-

Shutdown a node: Forces the selected node to shutdown.

When shutting down the nodes of a cluster, shut down the other (secondary) node first. When powering on the nodes, start the primary node first to avoid unnecessary takeovers.

-

Manual takeover: To activate the other node and disable the currently active node, click Activate slave.

Activating the secondary node terminates all connections of SSB and might result in data loss. The secondary node becomes active after about 60 seconds, during which SSB cannot accept incoming messages. Enable disk-buffering on your syslog-ng clients and relays to prevent data loss in such cases.

Adjusting the synchronization speed

When operating two syslog-ng Store Box (SSB) units in High Availability mode, every incoming data copied from the master (active) node to the slave (passive) node. Since synchronizing data can take up significant system-resources, the maximal speed of the synchronization is limited, by default, to 10 Mbps. However, this means that synchronizing large amount of data can take very long time, so it is useful to increase the synchronization speed in certain situations — for example, when synchronizing the disks after converting a single node to a high availability cluster.

The Basic Settings > High Availability > DRBD status field indicates whether the latest data (including SSB configuration, log files, and so on) is available on both SSB nodes. For a description of each possible status, see Understanding SSB cluster statuses.

To change the limit of the DRBD synchronization rate, navigate to Basic Settings > High Availability, select DRBD sync rate limit, and select the desired value.

Set the sync rate carefully. A high value is not recommended if the load of SSB is very high, as increasing the resources used by the synchronization process may degrade the general performance of SSB. On the other hand, the HA link's speed must exceed the speed of the incoming logs, else the web UI might become unresponsive and data loss can occur.

If you experience bursts of high activity, consider turning on asynchronous data replication.

Asynchronous data replication

When a high availability syslog-ng Store Box (SSB) cluster is operating in a high-latency environment or during brief periods of high load, there is a risk of slowness, latency or package loss. To manage this, you can compensate latency with asynchronous data replication.

Asynchronous data replication is a method where local write operations on the primary node are considered complete when the local disk write is finished and the replication packet is placed in the local TCP send buffer. It does not impact application performance, and tolerates network latency, allowing the use of physically distant storage nodes. However, because data is replicated at some point after local acknowledgement, the remote storage nodes are slightly out of step: if the local node at the primary data center breaks down, data loss occurs.

To turn asynchronous data replication on, navigate to Basic Settings > High Availability, and enable DRBD asynchronous mode. You must reboot the cluster (click Reboot cluster) for the change to take effect.

Under prolonged heavy load, asynchronous data replication might not be able to compensate for latency or for high packet loss ratio (over 1%). In this situation, stopping the slave machine is recommended to avoid data loss at the temporary expense of redundancy.